PowerShell 7 ForEach-Object -Parallel Memory Consumption

Posted on March 09, 2020 and tagged as powershell

I’m undertaking a project where one of the tasks involves processing some 350,000 XML files. If each file takes one second to process, that’s just over 4 days, which is not a particularly appealing length of time. I’ve been working on improving performance by implementing concurrency, and with the recent release of PowerShell 7 I decided to check out the new ForEach-Object -Parallel functionality, which greatly simplifies running work in parallel.

As is the norm, few things are as easy as one would hope. The issue I faced was ever-increasing memory consumption, apparently due to Get-Content being called within the ForEach-Object scriptblock. As more files were processed, PowerShell would consume (and not release) memory. The first time I left the process running overnight, I woke up to a server in a rather poor state, with most of the RAM (16GB) consumed by the pwsh.exe process. So I dug a little deeper and did some testing.

Let’s begin with a few notes on the testing process and defining key outcomes.

PowerShell 7 ForEach-Object -Parallel Memory Usage Testing

Key Metric

The primary metric we’re interested in is memory consumption, not execution time. All of the heavy file processing that benefits from concurrency has been stripped out of the test code to eliminate potential causes of memory consumption. Essentially all that is left is Get-Content. This is important because some of the graphs may show better performance with no multithreading, but this is due to the stripped-down code.

Number of Items

While I have ~350,000 files to process, the testing was limited to 5000 files. This provides an adequate sample to get the data we need, and means I get to complete the testing in a reasonable time frame.

File properties

The files in question are small, ranging from 3KB to 400KB.

The usual way to load an XML file in PowerShell is via [xml](Get-Content $PathToFile). However, to eliminate XML processing as the cause, the tests simply call Get-Content $PathToFile.
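
As a small illustration of the [xml] cast mentioned above, the sketch below writes a throwaway XML file to the temp directory and loads it. The file name and element names are made up for the example, and -Raw is my addition so that the file is read as a single string rather than an array of lines.

```powershell
# Hypothetical sample file; the path and element names are for illustration only
$PathToFile = Join-Path ([System.IO.Path]::GetTempPath()) 'sample-product.xml'
Set-Content -Path $PathToFile -Value '<product><name>Widget</name><price>9.99</price></product>'

# Cast the file content to an XmlDocument
[xml]$Xml = Get-Content -Path $PathToFile -Raw

# Child elements are exposed as properties
$Xml.product.name    # Widget

# Clean up the sample file
Remove-Item $PathToFile
```

The tests in this post skip the cast entirely, so any memory growth they show cannot be blamed on XML parsing.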

Variables referenced

For the sake of brevity, the testing code relies on a few common variables that I don’t want to define in each code block. Here they are.

# The path to the XML files

$FileRoot = 'E:\XMLFiles'

# An array containing filenames of the first 5000 files

$FilesToProcess = (Get-ChildItem $FileRoot | Select -First 5000).Name

Testing

Each test (apart from one, more on that in the results) was run 3 times. In reality, much more testing was done, but only a limited number of tests had data recorded for the purpose of this post.

Each test will show the code used, followed by a graph where the Y axis shows the working memory of the PowerShell process, and the X axis shows the elapsed seconds since test execution began.
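
The measurement code itself isn’t part of this post, so the following is only a sketch of one way such data could be collected: poll the working set of the process under test at a fixed interval, ideally from a second PowerShell session so the sampling doesn’t interfere with the test. The function name Measure-WorkingSet and its parameters are hypothetical and are not what produced the graphs here.

```powershell
# Hypothetical helper: samples the working set of a process at a fixed interval
function Measure-WorkingSet {
    param(
        [int]$ProcessId = $PID,     # process to watch (defaults to the current session)
        [int]$Samples = 3,          # number of readings to take
        [int]$IntervalMs = 1000     # delay between readings
    )

    $Stopwatch = [System.Diagnostics.Stopwatch]::StartNew()
    for ($i = 0; $i -lt $Samples; $i++) {
        $Process = Get-Process -Id $ProcessId
        [pscustomobject]@{
            ElapsedSeconds = [math]::Round($Stopwatch.Elapsed.TotalSeconds, 1)
            WorkingSetMB   = [math]::Round($Process.WorkingSet64 / 1MB, 1)
        }
        Start-Sleep -Milliseconds $IntervalMs
    }
}

# Take three quick readings of the current session
$Readings = Measure-WorkingSet -Samples 3 -IntervalMs 200
$Readings | Format-Table
```

Piping the readings to Export-Csv instead of Format-Table would give a file that can be charted with elapsed seconds on the X axis and working set on the Y axis.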

A Baseline Using PowerShell 5.1

It’s important to compare our PowerShell 7 results to PowerShell 5.1, so let’s establish a 5.1 baseline now.

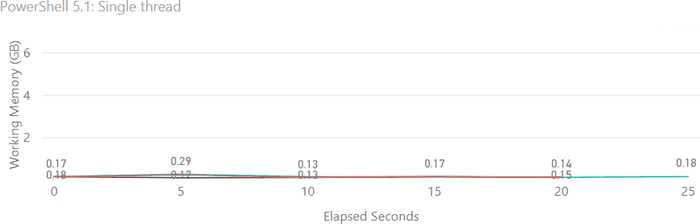

PowerShell 5.1, No Multithreading

$FilesToProcess | ForEach-Object {

Write-Host "$((Get-Date).toString()): $_"

$Data = Get-Content "$FileRoot\$_"

}

Memory usage is very low, and execution time to open all 5000 files is between 20 and 25 seconds.

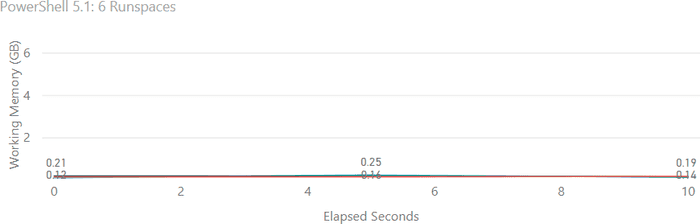

PowerShell 5.1, 6 Runspaces

Runspaces are what I wished to move away from due to the need for so much boilerplate.

$Worker = {

param($Filename)

Write-Host (Get-Date).toString()

$Data = Get-Content "E:\Products\$Filename"

}

$MaxRunspaces = 6

$RunspacePool = [runspacefactory]::CreateRunspacePool(1, $MaxRunspaces)

$RunspacePool.Open()

$Jobs = New-Object System.Collections.ArrayList

foreach ($File in $FilesToProcess) {

Write-Host "Creating runspace for $File"

$PowerShell = [powershell]::Create()

$PowerShell.RunspacePool = $RunspacePool

$PowerShell.AddScript($Worker).AddArgument($File) | Out-Null

$JobObj = New-Object -TypeName PSObject -Property @{

Runspace = $PowerShell.BeginInvoke()

PowerShell = $PowerShell

}

$Jobs.Add($JobObj) | Out-Null

}

while ($Jobs.Runspace.IsCompleted -contains $false) {

Write-Host (Get-date).Tostring() "Still running..."

Start-Sleep 1

}

Execution time has improved (but remember, we don’t particularly care about that here), and memory usage is still acceptable.
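
The test code above only waits for the jobs to finish; it never collects their output or releases the PowerShell instances and the pool. I did not measure whether that matters for memory, but for anyone reusing the boilerplate, here is a minimal sketch of the collection and cleanup step. The worker is a stand-in that returns the length of a string rather than reading a file, and the input values are made up.

```powershell
# Stand-in worker: returns the length of its input instead of reading a file
$Worker = {
    param($Item)
    $Item.Length
}

$RunspacePool = [runspacefactory]::CreateRunspacePool(1, 2)
$RunspacePool.Open()

# Queue one PowerShell instance per input item
$Jobs = foreach ($Item in 'alpha', 'be', 'gam') {
    $PowerShell = [powershell]::Create()
    $PowerShell.RunspacePool = $RunspacePool
    $null = $PowerShell.AddScript($Worker).AddArgument($Item)
    [pscustomobject]@{
        PowerShell = $PowerShell
        Handle     = $PowerShell.BeginInvoke()
    }
}

# EndInvoke blocks until the job has finished and returns its output;
# Dispose then releases the PowerShell instance
$Results = foreach ($Job in $Jobs) {
    $Job.PowerShell.EndInvoke($Job.Handle)
    $Job.PowerShell.Dispose()
}

# Release the pool once every job has been collected
$RunspacePool.Close()
$RunspacePool.Dispose()

$Results    # 5, 2, 3
```

Because the jobs are collected in the order they were queued, the results line up with the inputs even if the jobs finish out of order.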

Processing the same files with PowerShell 7

PowerShell 7, No Multithreading

Let’s again begin with a single-threaded baseline. This code is identical to the PowerShell 5.1 single-threaded test.

$FilesToProcess | ForEach-Object {

Write-Host "$((Get-Date).toString()): $_"

$Data = Get-Content "$FileRoot\$_"

}

Memory usage is a little higher than PowerShell 5.1, but still very acceptable.

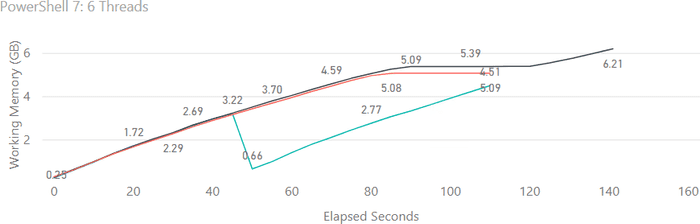

PowerShell 7, ForEach-Object -Parallel with 6 Threads

This is where things start to get a little crazy.

$FilesToProcess | ForEach-Object -ThrottleLimit 6 -Parallel {

Write-Host "$((Get-Date).toString()): $_"

$Data = Get-Content "$using:FileRoot\$_"

}

We’ve gone from ~400MB working memory to approximately 5-6GB. You will notice a sharp drop in memory consumption in one of the tests at around the 50 second mark. I suspect this is garbage collection kicking in, but it is definitely not consistent.

The execution time has also gone from ~20 seconds with a single thread to 120 seconds. This is where we need to ignore the time taken as all of the heavier processing that warrants multithreading has been stripped away.

I should note that while our sample size is 5000 files, the memory consumption does keep increasing as we increase that number - I did not observe a point where it stabilises.

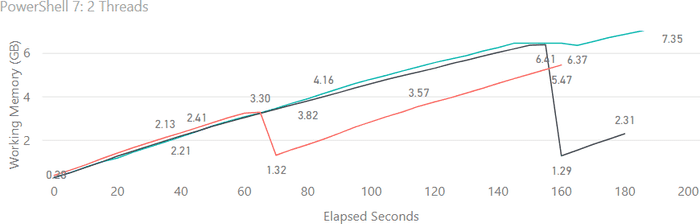

PowerShell 7, ForEach-Object -Parallel with 2 Threads

I figured one possible cause of the memory usage would be that we’re running multiple runspaces under the hood, and multiple sources have written that there are overheads involved.

This doesn’t explain the stark difference between PowerShell 5.1 runspace memory consumption and PowerShell 7, but I wanted to explore it nevertheless.

I wanted to see if memory consumption would be smaller if we reduced the number of threads.

$FilesToProcess | ForEach-Object -ThrottleLimit 2 -Parallel {

Write-Host "$((Get-Date).toString()): $_"

$Data = Get-Content "$using:FileRoot\$_"

}

Unfortunately reducing the number of threads did not have an impact on memory consumption, and our runtime increased by some 60 seconds.

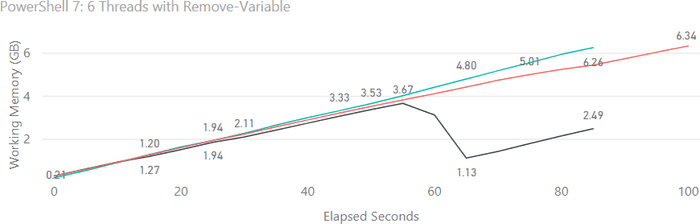

PowerShell 7, ForEach-Object -Parallel With 6 Threads and Remove-Variable

I wanted to encourage garbage collection to take place without explicitly calling it, so I tried using Remove-Variable to effectively ‘delete’ the $Data variable.

$FilesToProcess | ForEach-Object -ThrottleLimit 6 -Parallel {

Write-Host "$((Get-Date).toString()): $_"

$Data = Get-Content "$using:FileRoot\$_"

Remove-Variable Data

}

Memory consumption remained similar, but with much faster execution time. I’m not sure how to explain this improvement in speed, so please drop me a line in the comments if you have some ideas.

I also tried setting $Data to $null with memory consumption remaining largely unchanged.

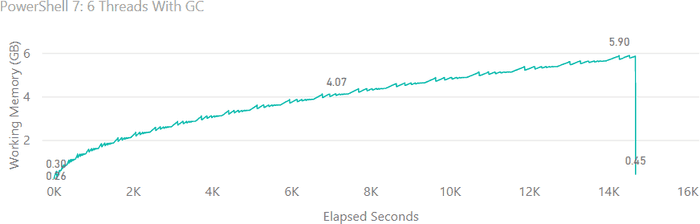

PowerShell 7, ForEach-Object -Parallel with 6 Threads and Manual Garbage Collection

I tried to avoid manually running GC because of the performance impact, but decided to give it a try to see the results.

$FilesToProcess | ForEach-Object -ThrottleLimit 6 -Parallel {

Write-Host "$((Get-Date).toString()): $_"

$Data = Get-Content "$using:FileRoot\$_"

Remove-Variable Data

[system.gc]::Collect()

}

This graph is a little interesting: it appears that running garbage collection inside a loop or pipeline does not function as expected. This is covered in more detail by Jeremy Saunders in this post on his website.

For completeness, I did re-run the test with [System.GC]::GetTotalMemory('forcefullcollection') | Out-Null as referenced by Jeremy, but had close to identical results as what is graphed above.
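
For reference, [System.GC]::GetTotalMemory takes a Boolean forceFullCollection argument, so the string used above is presumably just coerced to $true. The sketch below passes the Boolean explicitly and also shows a more explicit collect, finalize, collect sequence. It only demonstrates the calls in a plain session and makes no claim about how they behave inside a -Parallel scriptblock.

```powershell
# Managed heap size as currently known, without forcing a collection
$Before = [System.GC]::GetTotalMemory($false)

# Same call, but allowing a collection to run first
$After = [System.GC]::GetTotalMemory($true)

# A more explicit variant: collect, let finalizers run, collect again
[System.GC]::Collect()
[System.GC]::WaitForPendingFinalizers()
[System.GC]::Collect()

'{0:N1} MB before, {1:N1} MB after' -f ($Before / 1MB), ($After / 1MB)
```

Note that these figures describe the managed heap only, which is not the same thing as the process working set plotted in the graphs.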

You may have also noticed the drastically increased runtime: we’re at some 14,683 seconds, which is a little over 4 hours. The performance impact of manually running garbage collection so frequently is evident.

This is the one test where I only performed a single run. I had witnessed this behavior multiple times before I started saving data for this post, and did not feel the need to spend 8 more hours running it twice more.

As with the other tests, several of the runs where I did not record data did show the occasional drop in memory consumption seen in the other graphs, and, as in those graphs, this resulted in faster execution (approximately 10,000 seconds).

It was the sudden drop in memory at the very end that provided the solution to this issue.

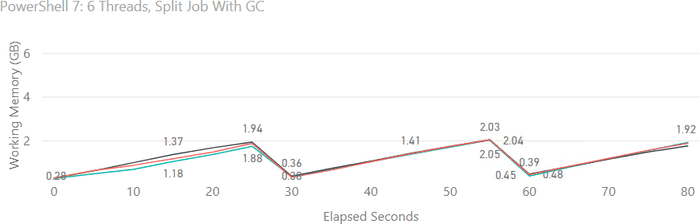

PowerShell 7, Batch Processing with ForEach-Object -Parallel Running 6 Threads

This solution is far from elegant or technically impressive, but it does get the job done. In short, we batch up groups of files to be processed, and run garbage collection after each batch.

This gives us the benefit of a manual GC while avoiding the issue of performance impact and the problem of GC not functioning during ForEach-Object -Parallel scriptblock execution.

For this test I had a look at the other results and estimated that at around 1500 files PowerShell would be consuming approximately 2GB of memory, and this was a value I found acceptable.

# Our parallel processing code is now inside a function that takes start and end array indexes as parameters

function ProcessFiles([int]$FromIndex, [int]$ToIndex) {

$FilesToProcess[$FromIndex..$ToIndex] | ForEach-Object -ThrottleLimit 6 -Parallel {

Write-Host "$((Get-Date).toString()): $_"

$Data = Get-Content "$using:FileRoot\$_"

Remove-Variable Data

}

}

$FromIndex = 0 # Arrays start at 0

$ToIndex = 1499 # Our initial batch goes to index 1499

$Increment = 1500 # We increment each batch by 1500 items

$End = $false # Bool for whether we're at the end of the FilesToProcess array

$LastItemIndex = $FilesToProcess.count - 1 # The index of the last item in the array

do {

# If the ToIndex value reaches or exceeds the index of the last item of the array, we set it to the last item

# and flip the $End flag. The check runs before processing so that the final batch is not skipped.

if ($ToIndex -ge $LastItemIndex) {

$ToIndex = $LastItemIndex

$End = $true

}

Write-Host "[+] Processing from $FromIndex to $ToIndex" -ForegroundColor Green

ProcessFiles -FromIndex $FromIndex -ToIndex $ToIndex

Write-Host "[+] Running Garbage Collection" -ForegroundColor Red

[system.gc]::Collect() # Manual garbage collection following parallel processing of a batch

# We increment the FromIndex and ToIndex variables to set them for the next batch

$FromIndex = $ToIndex + 1

$ToIndex = $ToIndex + $Increment

} while ($End -ne $true)

There we have it: acceptable memory usage with OK execution time.

This is one of the few graphs where the execution time is almost identical across runs; many others had differences in the tens of seconds. The results indicate that when garbage collection does kick in, it results in faster overall execution, as long as it’s not done excessively.

Finally, some additional testing I did showed faster execution with a smaller batch size. Ultimately the optimal batch size will depend on your files, acceptable memory consumption, and performance requirements.
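
To make the batch size easier to experiment with, the index arithmetic can be pulled out into a small helper. This is a sketch rather than the code used for the graphs: Get-BatchRange is a hypothetical name, and the ten-item array stands in for $FilesToProcess so the output is easy to check by eye.

```powershell
# Hypothetical helper: emits the start and end index of each batch
function Get-BatchRange {
    param(
        [int]$Count,       # total number of items
        [int]$BatchSize    # items per batch
    )
    for ($From = 0; $From -lt $Count; $From += $BatchSize) {
        [pscustomobject]@{
            From = $From
            # The last batch may be shorter, so clamp it to the final index
            To   = [math]::Min($From + $BatchSize, $Count) - 1
        }
    }
}

# Stand-in data; in this post it would be $FilesToProcess
$Items = 1..10

$Log = foreach ($Range in Get-BatchRange -Count $Items.Count -BatchSize 4) {
    $Batch = $Items[$Range.From..$Range.To]
    "Batch $($Range.From)-$($Range.To): $($Batch -join ',')"
    # ... process $Batch here, for example with ForEach-Object -Parallel ...
    [System.GC]::Collect()    # manual collection between batches
}

$Log
# Batch 0-3: 1,2,3,4
# Batch 4-7: 5,6,7,8
# Batch 8-9: 9,10
```

With the ranges computed up front, trying a different batch size is a one-parameter change.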

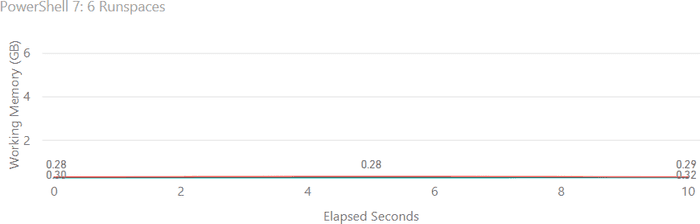

While execution time isn’t the core issue we’re tackling here, our PowerShell 7 performance still lags far behind results obtained with PowerShell 5.1 Runspaces.

The good news is there is nothing stopping us using our runspace code with PowerShell 7.

And the results are as good as with PowerShell 5.1.